Many of us see Google Search as a cross between a massive digital library and the world’s biggest Yellow Pages. Whether you want to know what time a soccer game comes on, when George Washington was born or where to get Jamaican food locally, the quickest and easiest way to answer simple inquiries is to Google it.

But what happens when you ask questions that are more subjective and nuanced and may need much more context, such as “Why did the Troubles happen in Ireland?” or “Why was the Civil War fought?” or “What is critical race theory?” Dr. Safiya Noble, an associate professor at the UCLA Department of Information Studies, warns that we are handing too much of our knowledge discovery over to Big Tech platforms like Google.

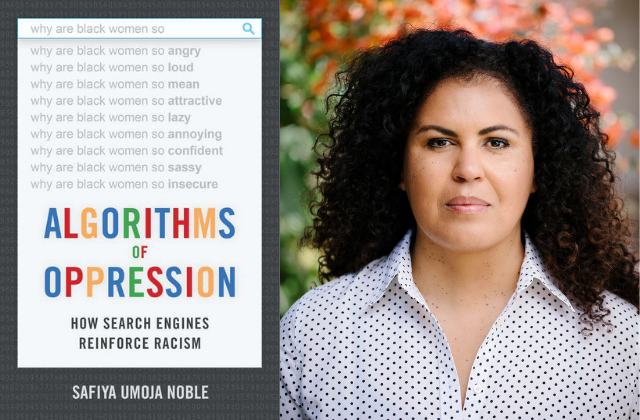

Dr. Noble co-founded (along with Dr. Sarah T. Roberts) the Center for Critical Internet Inquiry at UCLA. The center investigates the social impact of digital technologies on the broader public good. She also wrote the book “Algorithms of Oppression: How Search Engines Reinforce Racism,” which explains how racist and sexist ideas get encoded into Google search results.

In this interview with Colorlines, Dr. Noble explained how search engines actually work, what users are getting wrong when they think of Google as a “fact-checker,” the ways in which Google and other platforms play a role in the spread of misinformation and propaganda, what societal problems technology can’t fix, and more.

This interview has been edited for length and clarity.

The majority of internet users use Google as their preferred search engine. But most people wouldn’t be able to articulate how it works. How do search engines work?

One thing that many people don’t understand is that search engines are multinational advertising platforms. The way they make money is by prioritizing people, companies, organizations, industries, etc. that pay the most to optimize their content and put it on the first page of the search results. There’s a 24/7 auction in which they outbid each other to link specific keywords to their content. It also works by optimizing websites with keywords that Google can index.

What are users getting wrong when they look at Google as an arbiter of truth, or a big digital library?

Unlike social media, where people know that the content they see is influenced by who they follow, what they’ve clicked on in the past, etc., people think of search engines as neutral, objective fact-checkers that are reliable and curated by experts. Part of that is because in the early history of the web, the precursors to search engines were virtual libraries where experts organized things so you could find them easily. Another part is because when they search for banal things (where is the closest Starbucks, what time does the mall close, etc.), these types of facts are corroborated. The problem is that when you ask the search engine a social question that is subjective and might have many points of view, you’re more likely to accept what you find on the first page as fact. This is why it’s so important for us to keep our eyes on search engines, because it’s everyday technology that everyone from parents to teachers to newscasters uses. It can have quite devastating consequences because Google Search is rife with disinformation and propaganda.

In your book “Algorithms of Oppression,” you talk about how pre-existing racist and sexist ideas get encoded into the search results. Could you give an example of this?

One of the stories I write about in the book is how in his manifesto, Dylann Roof left a post about how he was trying to make sense of the news reporting about the murder of Trayvon Martin by George Zimmerman. He starts doing Google searches and one of the searches he does is “black on white crime.” “Black on white crime” is a racist red herring, as the majority of violent crimes are intraracial. But the phrase tends to appear on white supremacist and neo-Nazi sites, and of course he was led to those sites. Many of those sites are what Jessie Daniels calls “cloaked websites”: sites that look like legitimate news sites but are actually created by white supremacist organizations. Roof said he learned about all the violent murders of white people by Black people, he learned about the “Jewish problem,” and claims he became fully “racially aware.” This shows how Google Search had radicalized him, and how it radicalizes people by edging them toward right-wing, fascist, racist ideological content. So as people are looking to understand racial issues, they are often led to clickbait and hateful content. This isn’t true only of Google; it happens on social media and other platforms like YouTube. But this is one example that shows how these platforms can be dangerous to society.

What do platforms like Google need to do to stop this type of misinformation, disinformation, and radicalization from happening? Particularly since, although these things hurt everyone, the people who take the brunt of it are marginalized groups?

I think that these questions of impact aren’t the priority for them. The platforms are doing what they are designed to do, and their company imperative is to return value to shareholders at all costs. Journalists have written quite extensively about how Google’s own engineers don’t fully know how to fix their algorithms. What they do, when there is a public relations crisis about some terrible result, is just down-rank that content. So I’m not sure a platform designed for one thing can pivot. Is it safe for us to have one company that sets so much of the agenda about what we know? Should it and a handful of other companies be able to track us demographically and psychographically so they can manipulate what we know, while we displace and defund more democratic knowledge institutions?

I think the tech sector is eroding our ability to have the space and time to think critically, and it’s instead enculturating us to get quick and easy answers that are driven by economic power interests. The question for me is how do we keep monopolies from having a lock on our knowledge and information infrastructure? For me, it’s how do we have legitimate news, information, knowledge and education institutions and resources compete to be more viable and valuable than advertising companies? If the tech sector alone paid its fair share of taxes, we could make education high quality, affordable and accessible for everyone. Rather than making these things available, we just turn to Google. We have so many slow-knowledge endeavors and we want to value those things while putting Google in a narrower band of tools and resources we have at our fingertips.

Is there any part of the problem that can’t be solved by “better” technology or algorithms?

I think the problem with social media, search and the internet more broadly is that they are profoundly distorting. Some of these technologies are often predatory, used to weaponize, inflame and incite hate instead of educate, because it gets more engagement. So platforms are implicated in trafficking in hate on the internet and in real life. Right now there’s a national campaign afoot to discredit and troll those who talk about the historical and present conditions of racism in our country. Rather than deploy that energy to eradicate racism, there’s a massive campaign to destroy the lives of people who try to provide the evidence of these long-standing social conditions: how society has worked, the profound legacies of enslavement, discrimination, the rollback of civil rights, etc. Pointing out the facts is an important step toward solving these issues and enfranchising everyone in our society. Shooting the messengers who talk about these problems is not going to make these problems go away.

Joshua Adams is a Staff Writer for Colorlines. He’s a writer, journalist and educator from the South Side of Chicago. You can follow him @JournoJoshua.